Working Group 2 – Data Fusion

Systematic literature review of data fusion for lidar

Duration: 4-6 weeks.

Timing: February-March 2023.

Location: Leiden, the Netherlands

Total estimated grant: between 3000 and 4000 euros, depending on the duration and travelling expenses.

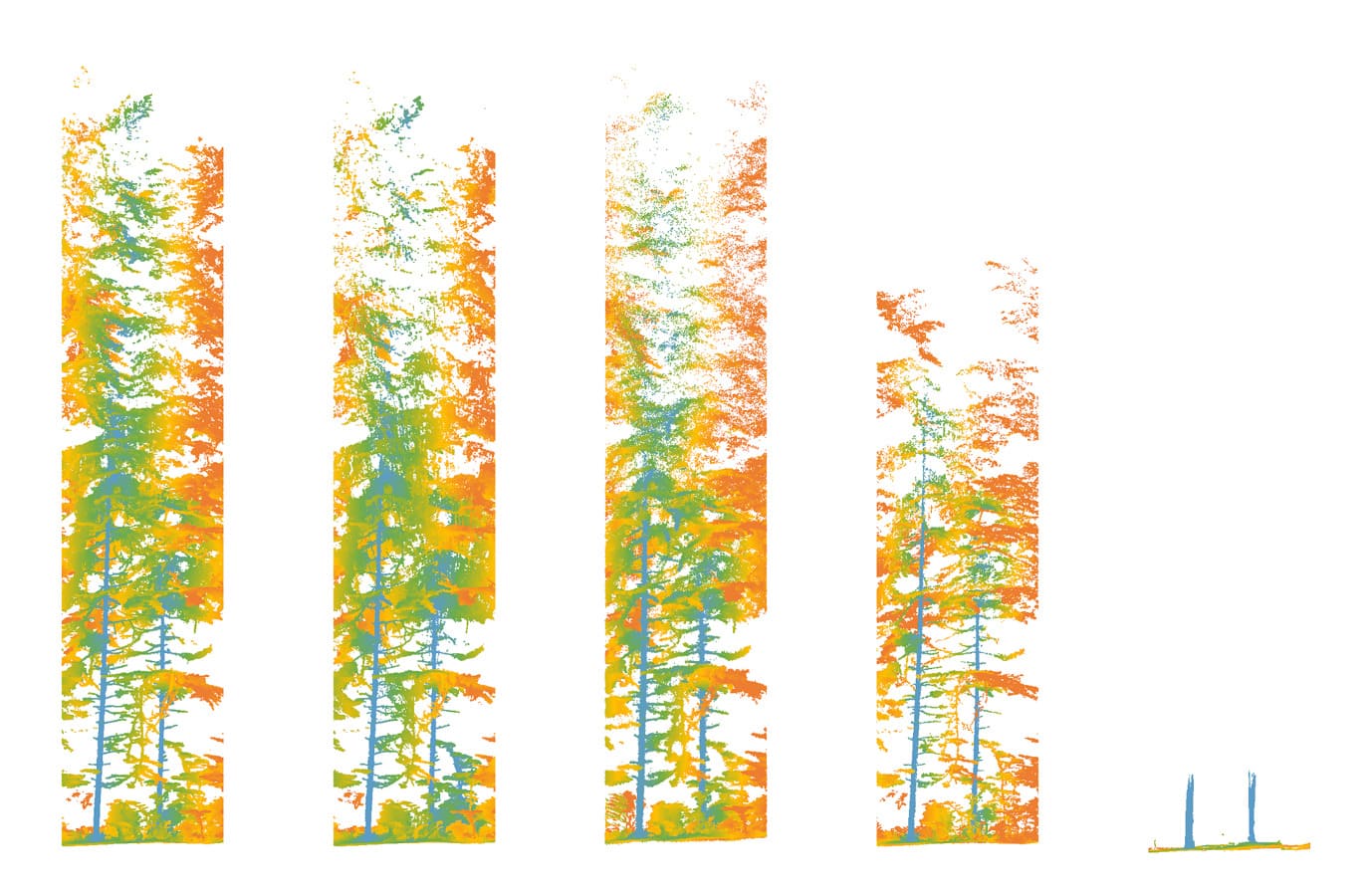

Description: This Short Term Scientific Mission (STSM) is an important part of the WG2 (data fusion) activities. During this STSM, the successful candidate will perform a systematic review of the literature on fusing lidar with other data sources (e.g. optical, microwave, in-situ). The candidate will first use specific search terms to make an initial selection of scientific papers. Next, the candidate will use a coding scheme to classify these papers based on the information in their abstracts. After this classification, a smaller selection of papers will be chosen for detailed analysis, part of which will be carried out by the STSM candidate. The results of this STSM will be used as input for a manuscript on the state of the art of data fusion techniques for lidar data on forest structure. The candidate is expected to have 4-6 weeks of full-time availability for this project, experience with lidar, (some) knowledge of data fusion approaches, and, ideally, experience with systematic literature reviews.

Rules of the COST Action: a Short-Term Scientific Mission consists of a visit to a host organization located in a country other than the country of affiliation. Therefore, in this case, it is not possible to offer the STSM to scientists affiliated with institutions in the Netherlands.

If you are interested, please send your CV and a letter of motivation to the following email addresses: Suzanne Marselis (s.m.marselis@cml.leidenuniv.nl), Markus Hollaus (markus.hollaus@geo.tuwien.ac.at) and 3DForEcoTech (3dforecotech@gmail.com).

The application deadline is 18.12.2022.